ChatGPT is just the beginning.

With computing now advancing at what he called “lightspeed,” NVIDIA founder and CEO Jensen Huang today announced a broad set of partnerships with Google, Microsoft, Oracle and a range of leading companies that bring new AI, simulation and collaboration capabilities to every industry.

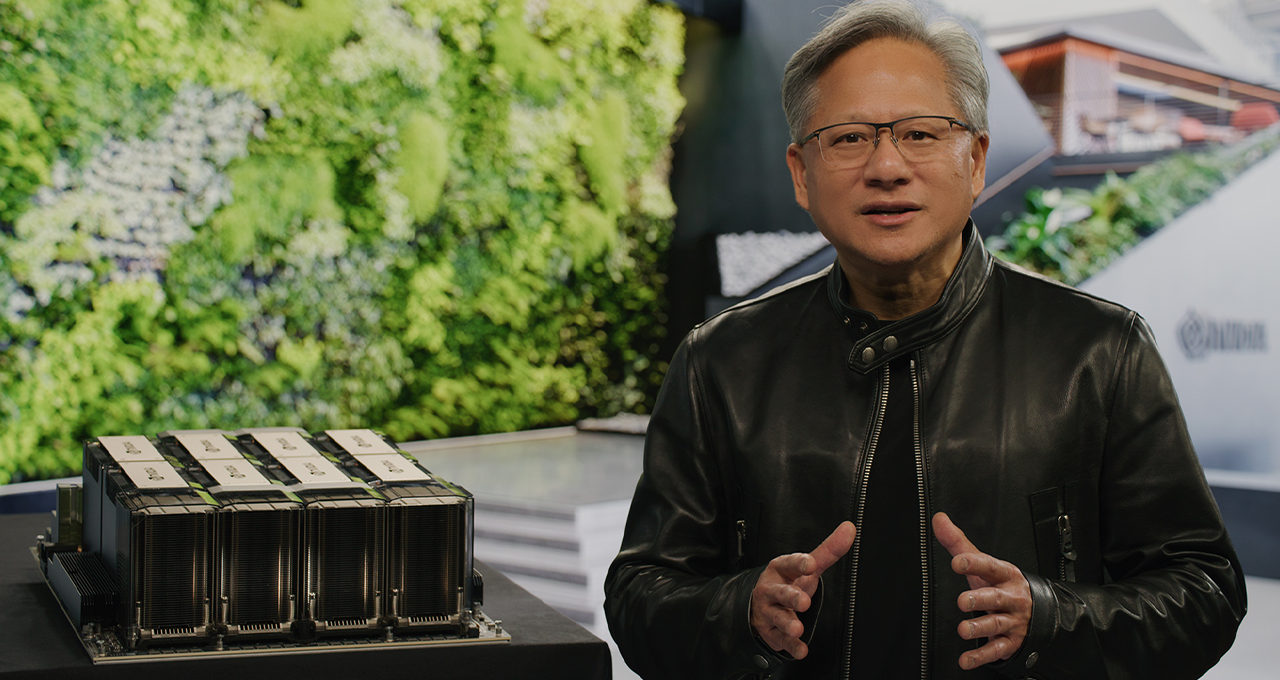

“The warp drive engine is accelerated computing, and the energy source is AI,” Huang said in his keynote at the company’s GTC conference. “The impressive capabilities of generative AI have created a sense of urgency for companies to reimagine their products and business models.”

In a sweeping 78-minute presentation anchoring the four-day event, Huang outlined how NVIDIA and its partners are offering everything from training to deployment for cutting-edge AI services. He announced new semiconductors and software libraries to enable fresh breakthroughs. And Huang revealed a complete set of systems and services for startups and enterprises racing to put these innovations to work on a global scale.

Huang punctuated his talk with vivid examples of this ecosystem at work. He announced that NVIDIA and Microsoft will connect hundreds of millions of Microsoft 365 and Azure users to a platform for building and operating hyperrealistic virtual worlds. He offered a peek at how Amazon is using sophisticated simulation capabilities to train new autonomous warehouse robots. He touched on the rise of a new generation of wildly popular generative AI services such as ChatGPT.

And underscoring the foundational nature of NVIDIA’s innovations, Huang detailed how, together with ASML, TSMC and Synopsys, NVIDIA’s computational lithography breakthroughs will help make a new generation of efficient, powerful 2-nm semiconductors possible.

The arrival of accelerated computing and AI comes just in time, with Moore’s Law slowing and industries tackling powerful dynamics: sustainability, generative AI and digitalization, Huang said. “Industrial companies are racing to digitalize and reinvent into software-driven tech companies, to be the disruptor and not the disrupted,” Huang said.

Acceleration lets companies meet these challenges. “Acceleration is the best way to reclaim power and achieve sustainability and Net Zero,” Huang said.

GTC: The Premier AI Conference

GTC, now in its 14th year, has become one of the world’s most important AI gatherings. This week’s conference features 650 talks from leaders such as Demis Hassabis of DeepMind, Valerie Taylor of Argonne Labs, Scott Belsky of Adobe, Paul Debevec of Netflix and Thomas Schulthess of ETH Zurich, as well as a special fireside chat between Huang and Ilya Sutskever, co-founder of OpenAI, the creator of ChatGPT.

More than 250,000 registered attendees will dig into sessions on everything from restoring the lost Roman mosaics of 2,000 years ago to building the factories of the future, from exploring the universe with a new generation of massive telescopes to rearranging molecules to accelerate drug discovery, to more than 70 talks on generative AI.

The iPhone Moment of AI

NVIDIA’s technologies are fundamental to AI, with Huang recounting how NVIDIA was there at the very beginning of the generative AI revolution. Back in 2016 he hand-delivered to OpenAI the first NVIDIA DGX AI supercomputer, the engine behind the large language model breakthrough powering ChatGPT.

Launched late last year, ChatGPT went mainstream almost instantaneously, attracting over 100 million users and making it the fastest-growing application in history. “We are at the iPhone moment of AI,” Huang said.

NVIDIA DGX supercomputers, originally used as an AI research instrument, are now running 24/7 at businesses across the world to refine data and process AI, Huang reported. Half of all Fortune 100 companies have installed DGX AI supercomputers.

“DGX supercomputers are modern AI factories,” Huang said.

NVIDIA H100, Grace Hopper, Grace, for Data Centers

Deploying LLMs like ChatGPT is a significant new inference workload, Huang said. For large-language-model inference, like ChatGPT, Huang announced a new GPU: the H100 NVL with dual-GPU NVLink.

Based on NVIDIA’s Hopper architecture, H100 features a Transformer Engine designed to process models such as the GPT model that powers ChatGPT. Compared to HGX A100 for GPT-3 processing, a standard server with four pairs of H100 with dual-GPU NVLink is up to 10x faster.

“H100 can reduce large language model processing costs by an order of magnitude,” Huang said.

Meanwhile, over the past decade, cloud computing has grown 20% annually into a $1 trillion industry, Huang said. NVIDIA designed the Grace CPU for an AI- and cloud-first world, where AI workloads are GPU accelerated. Grace is sampling now, Huang said.

NVIDIA’s new superchip, Grace Hopper, connects the Grace CPU and Hopper GPU over a high-speed 900GB/sec coherent chip-to-chip interface. Grace Hopper is ideal for processing giant datasets like AI databases for recommender systems and large language models, Huang explained.

“Customers want to build AI databases several orders of magnitude larger,” Huang said. “Grace Hopper is the ideal engine.”

DGX the Blueprint for AI Infrastructure

The latest version of DGX features eight NVIDIA H100 GPUs linked together to work as one giant GPU. “NVIDIA DGX H100 is the blueprint for customers building AI infrastructure worldwide,” Huang said, sharing that NVIDIA DGX H100 is now in full production.

H100 AI supercomputers are already coming online.

Oracle Cloud Infrastructure announced the limited availability of new OCI Compute bare-metal GPU instances featuring H100 GPUs.

Additionally, Amazon Web Services announced its forthcoming EC2 UltraClusters of P5 instances, which can scale in size up to 20,000 interconnected H100 GPUs.

This follows Microsoft Azure’s private preview announcement last week for its H100 virtual machine, ND H100 v5.

Meta has now deployed its H100-powered Grand Teton AI supercomputer internally for its AI production and research teams.

And OpenAI will be using H100s on its Azure supercomputer to power its continuing AI research.

Other partners making H100 available include Cirrascale and CoreWeave, both of which announced general availability today. Additionally, Google Cloud, Lambda, Paperspace and Vultr are planning to offer H100.

And servers and systems featuring NVIDIA H100 GPUs are available from leading server makers including Atos, Cisco, Dell Technologies, GIGABYTE, Hewlett Packard Enterprise, Lenovo and Supermicro.

DGX Cloud: Bringing AI to Every Company, Instantly

And to speed DGX capabilities to startups and enterprises racing to build new products and develop AI strategies, Huang announced NVIDIA DGX Cloud, which through partnerships with Microsoft Azure, Google Cloud and Oracle Cloud Infrastructure will bring NVIDIA DGX AI supercomputers “to every company, from a browser.”

DGX Cloud is optimized to run NVIDIA AI Enterprise, the world’s leading acceleration software suite for end-to-end development and deployment of AI. “DGX Cloud offers customers the best of NVIDIA AI and the best of the world’s leading cloud service providers,” Huang said.

NVIDIA is partnering with leading cloud service providers to host DGX Cloud infrastructure, starting with Oracle Cloud Infrastructure. Microsoft Azure is expected to begin hosting DGX Cloud next quarter, and the service will soon expand to Google Cloud and more.

This partnership brings NVIDIA’s ecosystem to cloud service providers while amplifying NVIDIA’s scale and reach, Huang said. Enterprises will be able to rent DGX Cloud clusters on a monthly basis, ensuring they can quickly and easily scale the development of large, multi-node training workloads.

Supercharging Generative AI

To accelerate the work of those seeking to harness generative AI, Huang announced NVIDIA AI Foundations, a family of cloud services for customers needing to build, refine and operate custom LLMs and generative AI trained with their proprietary data and for domain-specific tasks.

AI Foundations services include NVIDIA NeMo for building custom language text-to-text generative models; Picasso, a visual language model-making service for customers who want to build custom models trained with licensed or proprietary content; and BioNeMo, to help researchers in the $2 trillion drug discovery industry.

Adobe is partnering with NVIDIA to build a set of next-generation AI capabilities for the future of creativity.

Getty Images is collaborating with NVIDIA to train responsible generative text-to-image and text-to-video foundation models.

Shutterstock is working with NVIDIA to train a generative text-to-3D foundation model to simplify the creation of detailed 3D assets.

Accelerating Medical Advances

And NVIDIA announced that Amgen is accelerating drug discovery services with BioNeMo. In addition, Alchemab Therapeutics, AstraZeneca, Evozyne, Innophore and Insilico are all early access users of BioNeMo.

BioNeMo helps researchers create, fine-tune and serve custom models with their proprietary data, Huang explained.

Huang also announced that NVIDIA and Medtronic, the world’s largest healthcare technology provider, are partnering to build an AI platform for software-defined medical devices. The partnership will create a common platform for Medtronic systems, ranging from surgical navigation to robotic-assisted surgery.

And today Medtronic announced that its GI Genius system, with AI for early detection of colon cancer, is built on NVIDIA Holoscan, a software library for real-time sensor processing systems, and will ship around the end of this year.

“The world’s $250 billion medical instruments market is being transformed,” Huang said.

Speeding Deployment of Generative AI Applications

To help companies deploy rapidly emerging generative AI models, Huang announced inference platforms for AI video, image generation, LLM deployment and recommender inference. They combine NVIDIA’s full stack of inference software with the latest NVIDIA Ada, Hopper and Grace Hopper processors, including the NVIDIA L4 Tensor Core GPU and the NVIDIA H100 NVL GPU, both launched today.

• NVIDIA L4 for AI Video can deliver 120x more AI-powered video performance than CPUs, combined with 99% better energy efficiency.

• NVIDIA L40 for Image Generation is optimized for graphics and AI-enabled 2D, video and 3D image generation.

• NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale.

• And NVIDIA Grace Hopper for Recommendation Models is ideal for graph recommendation models, vector databases and graph neural networks.

Google Cloud is the first cloud service provider to offer L4 to customers with the launch of its new G2 virtual machines, available in private preview today. Google is also integrating L4 into its Vertex AI model store.

Microsoft, NVIDIA to Bring Omniverse to ‘Hundreds of Millions’

Unveiling a second cloud service to speed unprecedented simulation and collaboration capabilities to enterprises, Huang announced NVIDIA is partnering with Microsoft to bring NVIDIA Omniverse Cloud, a fully managed cloud service, to the world’s industries.

“Microsoft and NVIDIA are bringing Omniverse to hundreds of millions of Microsoft 365 and Azure users,” Huang said, also unveiling new NVIDIA OVX servers and a new generation of workstations powered by NVIDIA RTX Ada Generation GPUs and Intel’s newest CPUs optimized for NVIDIA Omniverse.

To show the extraordinary capabilities of Omniverse, NVIDIA’s open platform built for 3D design collaboration and digital twin simulation, Huang shared a video showing how NVIDIA Isaac Sim, NVIDIA’s robotics simulation and synthetic data generation platform built on Omniverse, is helping Amazon save time and money with full-fidelity digital twins.

It shows how Amazon is working to choreograph the movements of Proteus, Amazon’s first fully autonomous warehouse robot, as it moves bins of products from one place to another in Amazon’s cavernous warehouses alongside humans and other robots.

Digitizing the $3 Trillion Auto Industry

Illustrating the scale of Omniverse’s reach and capabilities, Huang dug into Omniverse’s role in digitalizing the $3 trillion auto industry. By 2030, auto manufacturers will build 300 factories to make 200 million electric vehicles, Huang said, and battery makers are building 100 more megafactories. “Digitalization will enhance the industry’s efficiency, productivity and speed,” Huang said.

Touching on Omniverse’s adoption across the industry, Huang said Lotus is using Omniverse to virtually assemble welding stations. Mercedes-Benz uses Omniverse to build, optimize and plan assembly lines for new models. Rimac and Lucid Motors use Omniverse to build digital stores from actual design data that faithfully represent their cars.

Working with Idealworks, BMW uses Isaac Sim in Omniverse to generate synthetic data and scenarios to train factory robots. And BMW is using Omniverse to plan operations across factories worldwide and is building a new electric-vehicle factory, completely in Omniverse, two years before the plant opens, Huang said.

Separately, NVIDIA today announced that BYD, the world’s leading manufacturer of new energy vehicles (NEVs), will extend its use of the NVIDIA DRIVE Orin centralized compute platform to a broader range of its NEVs.

Accelerating Semiconductor Breakthroughs

Enabling semiconductor leaders such as ASML, TSMC and Synopsys to accelerate the design and manufacture of a new generation of chips as current production processes near the limits of what physics makes possible, Huang announced NVIDIA cuLitho, a breakthrough that brings accelerated computing to the field of computational lithography.

The new NVIDIA cuLitho software library for computational lithography is being integrated by TSMC, the world’s leading foundry, as well as electronic design automation leader Synopsys into their software, manufacturing processes and systems for the latest-generation NVIDIA Hopper architecture GPUs.

Chip-making equipment provider ASML is working closely with NVIDIA on GPUs and cuLitho, and plans to integrate support for GPUs into all of its computational lithography software products. With lithography at the limits of physics, NVIDIA’s introduction of cuLitho enables the industry to go to 2nm and beyond, Huang said.

“The chip industry is the foundation of nearly every industry,” Huang said.

Accelerating the World’s Largest Companies

Companies around the world are on board with Huang’s vision.

Telecom giant AT&T uses NVIDIA AI to more efficiently process data and is testing Omniverse ACE and the Tokkio AI avatar workflow to build, customize and deploy virtual assistants for customer service and its employee help desk.

American Express, the U.S. Postal Service, Microsoft Office and Teams, and Amazon are among the 40,000 customers using the high-performance NVIDIA TensorRT inference optimizer and runtime, and NVIDIA Triton, multi-framework data center inference-serving software.

Uber uses Triton to serve hundreds of thousands of ETA predictions per second.

And with over 60 million daily users, Roblox uses Triton to serve models for game recommendations, build avatars, and moderate content and marketplace ads.

Microsoft, Tencent and Baidu are all adopting NVIDIA CV-CUDA for AI computer vision. The technology, in open beta, optimizes pre- and post-processing, delivering 4x savings in cost and energy.

Helping Do the Impossible

Wrapping up his talk, Huang thanked NVIDIA’s systems, cloud and software partners, as well as researchers, scientists and employees.

NVIDIA has updated 100 acceleration libraries, including cuQuantum and the newly open-sourced CUDA Quantum for quantum computing, cuOpt for combinatorial optimization, and cuLitho for computational lithography, Huang announced.

The global NVIDIA ecosystem, Huang reported, now spans 4 million developers, 40,000 companies and 14,000 startups in NVIDIA Inception.

“Together,” Huang said, “we are helping the world do the impossible.”