NVIDIA today introduced a wave of cutting-edge AI research that will enable developers and artists to bring their ideas to life, whether still or moving, in 2D or 3D, hyperrealistic or fantastical.

Around 20 NVIDIA Research papers advancing generative AI and neural graphics, including collaborations with over a dozen universities in the U.S., Europe and Israel, are headed to SIGGRAPH 2023, the premier computer graphics conference, taking place Aug. 6-10 in Los Angeles.

The papers include generative AI models that turn text into personalized images; inverse rendering tools that transform still images into 3D objects; neural physics models that use AI to simulate complex 3D elements with stunning realism; and neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.

Innovations by NVIDIA researchers are regularly shared with developers on GitHub and incorporated into products, including the NVIDIA Omniverse platform for building and operating metaverse applications and NVIDIA Picasso, a recently announced foundry for custom generative AI models for visual design. Years of NVIDIA graphics research helped bring film-style rendering to games, like the recently released Cyberpunk 2077 Ray Tracing: Overdrive Mode, the world’s first path-traced AAA title.

The research advancements presented this year at SIGGRAPH will help developers and enterprises rapidly generate synthetic data to populate virtual worlds for robotics and autonomous vehicle training. They’ll also enable creators in art, architecture, graphic design, game development and film to more quickly produce high-quality visuals for storyboarding, previsualization and even production.

AI With a Personal Touch: Customized Text-to-Image Models

Generative AI models that transform text into images are powerful tools to create concept art or storyboards for films, video games and 3D virtual worlds. Text-to-image AI tools can turn a prompt like “children’s toys” into nearly infinite visuals a creator can use for inspiration, generating images of stuffed animals, blocks or puzzles.

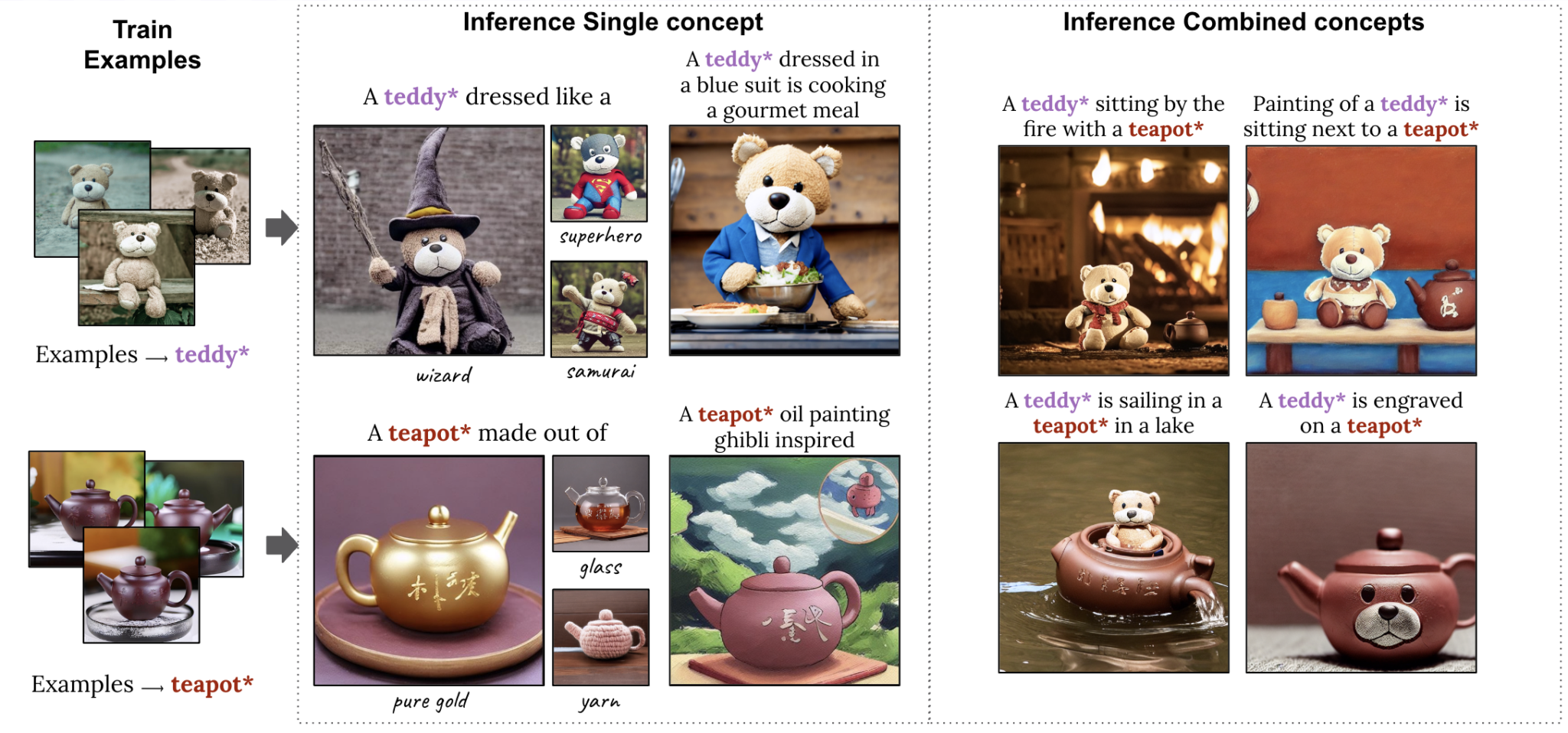

However, artists may have a particular subject in mind. A creative director for a toy brand, for example, could be planning an ad campaign around a new teddy bear and want to visualize the toy in different situations, such as a teddy bear tea party. To enable this level of specificity in the output of a generative AI model, researchers from Tel Aviv University and NVIDIA have two SIGGRAPH papers that enable users to provide image examples that the model quickly learns from.

One paper describes a technique that needs a single example image to customize its output, accelerating the personalization process from minutes to roughly 11 seconds on a single NVIDIA A100 Tensor Core GPU, more than 60x faster than previous personalization approaches.

A second paper introduces a highly compact model called Perfusion, which takes a handful of concept images to allow users to combine multiple personalized elements, such as a specific teddy bear and teapot, into a single AI-generated visual.

Serving in 3D: Advances in Inverse Rendering and Character Creation

Once a creator comes up with concept art for a virtual world, the next step is to render the environment and populate it with 3D objects and characters. NVIDIA Research is inventing AI techniques to accelerate this time-consuming process by automatically transforming 2D images and videos into 3D representations that creators can import into graphics applications for further editing.

A third paper, created with researchers at the University of California, San Diego, discusses technology that can generate and render a photorealistic 3D head-and-shoulders model based on a single 2D portrait, a major breakthrough that makes 3D avatar creation and 3D video conferencing accessible with AI. The method runs in real time on a consumer desktop and can generate a photorealistic or stylized 3D telepresence using only conventional webcams or smartphone cameras.

A fourth project, a collaboration with Stanford University, brings lifelike motion to 3D characters. The researchers created an AI system that can learn a range of tennis skills from 2D video recordings of real tennis matches and apply this motion to 3D characters. The simulated tennis players can accurately hit the ball to target positions on a virtual court, and even play extended rallies with other characters.

Beyond the test case of tennis, this SIGGRAPH paper addresses the difficult challenge of producing 3D characters that can perform diverse skills with realistic movement, without the use of expensive motion-capture data.

Not a Hair Out of Place: Neural Physics Enables Realistic Simulations

Once a 3D character is generated, artists can layer in realistic details such as hair, which is a complex, computationally expensive challenge for animators.

Humans have an average of 100,000 hairs on their heads, with each reacting dynamically to an individual’s motion and the surrounding environment. Traditionally, creators have used physics formulas to calculate hair movement, simplifying or approximating its motion based on the resources available. That’s why virtual characters in a big-budget film sport much more detailed heads of hair than real-time video game avatars.

A fifth paper showcases a method that can simulate tens of thousands of hairs in high resolution and in real time using neural physics, an AI technique that teaches a neural network to predict how an object would move in the real world.

The team’s novel approach for accurate simulation of full-scale hair is specifically optimized for modern GPUs. It offers significant performance leaps compared to state-of-the-art, CPU-based solvers, reducing simulation times from multiple days to merely hours, while also boosting the quality of hair simulations possible in real time. This technique finally enables both accurate and interactive physically based hair grooming.
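To make the idea of neural physics more concrete, here is a toy sketch of learning a motion predictor from simulated data. It is not the paper's hair solver: it assumes a single damped spring as the "object", and it uses one linear layer (about the smallest network possible) trained by gradient descent to predict the next position and velocity. The function names, the spring constants and the training settings are all illustrative assumptions.

```python
import random

def true_step(x, v, dt=0.01, k=40.0, c=0.5):
    """Reference physics (assumed toy system): one semi-implicit Euler
    step of a damped spring with stiffness k and damping c."""
    v_new = v + dt * (-k * x - c * v)
    x_new = x + dt * v_new
    return x_new, v_new

def make_dataset(n, seed=0):
    """Sample random states and label each with the simulator's next state."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, v = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        data.append(((x, v), true_step(x, v)))
    return data

def train_linear_predictor(data, epochs=300, lr=0.5):
    """Fit a 2x2 weight matrix (one linear layer, no bias) by full-batch
    gradient descent on mean squared error."""
    w = [[0.0, 0.0], [0.0, 0.0]]
    n = len(data)
    for _ in range(epochs):
        grad = [[0.0, 0.0], [0.0, 0.0]]
        for inp, target in data:
            for i in range(2):
                pred = w[i][0] * inp[0] + w[i][1] * inp[1]
                err = pred - target[i]
                for j in range(2):
                    grad[i][j] += 2.0 * err * inp[j] / n
        for i in range(2):
            for j in range(2):
                w[i][j] -= lr * grad[i][j]
    return w

def predict(w, x, v):
    """Predict the next (position, velocity) with the learned layer."""
    return (w[0][0] * x + w[0][1] * v, w[1][0] * x + w[1][1] * v)

if __name__ == "__main__":
    weights = train_linear_predictor(make_dataset(200))
    print("learned step:", predict(weights, 0.3, -0.2))
    print("simulated step:", true_step(0.3, -0.2))
```

Because this toy system is linear, a linear layer can match the simulator almost exactly. Real hair dynamics are nonlinear and involve many interacting strands, which is why the research relies on much larger networks and GPU-optimized solvers.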

Neural Rendering Brings Film-Quality Detail to Real-Time Graphics

After an environment is filled with animated 3D objects and characters, real-time rendering simulates the physics of light reflecting through the virtual scene. Recent NVIDIA research shows how AI models for textures, materials and volumes can deliver film-quality, photorealistic visuals in real time for video games and digital twins.

NVIDIA invented programmable shading over two decades ago, enabling developers to customize the graphics pipeline. In these latest neural rendering inventions, researchers extend programmable shading code with AI models that run deep inside NVIDIA’s real-time graphics pipelines.

In a sixth SIGGRAPH paper, NVIDIA will present neural texture compression that delivers up to 16x more texture detail without taking additional GPU memory. Neural texture compression can substantially increase the realism of 3D scenes, as seen in the image below, which demonstrates how neural-compressed textures (right) capture sharper detail than previous formats, where the text remains blurry (center).

A related paper announced last year is now available in early access as NeuralVDB, an AI-enabled data compression technique that decreases by 100x the memory needed to represent volumetric data such as smoke, fire, clouds and water.

NVIDIA also released today more details about neural materials research that was shown in the most recent NVIDIA GTC keynote. The paper describes an AI system that learns how light reflects from photoreal, many-layered materials, reducing the complexity of these assets down to small neural networks that run in real time, enabling up to 10x faster shading.

The level of realism can be seen in this neural-rendered teapot, which accurately represents the ceramic, the imperfect clear-coat glaze, fingerprints, smudges and even dust.

More Generative AI and Graphics Research

These are just the highlights; read more about all the NVIDIA papers at SIGGRAPH. NVIDIA will also present six courses, four talks and two Emerging Technology demos at the conference, with topics including path tracing, telepresence and diffusion models for generative AI.

NVIDIA Research has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics.