In his first live keynote since the pandemic, NVIDIA founder and CEO Jensen Huang today kicked off the COMPUTEX conference in Taipei, announcing platforms that companies can use to ride a historic wave of generative AI that’s transforming industries from advertising to manufacturing to telecom.

“We’re back,” Huang roared as he took the stage after years of virtual keynotes, some from his home kitchen. “I haven’t given a public speech in almost four years. Wish me luck!”

Speaking for nearly two hours to a packed house of some 3,500, he described accelerated computing services, software and systems that are enabling new business models and making current ones more efficient.

“Accelerated computing and AI mark a reinvention of computing,” said Huang, whose travels in his hometown over the past week have been tracked daily by local media.

In a demonstration of its power, he used the massive 8K wall he spoke in front of to show a text prompt generating a theme song for his keynote, as singable as any karaoke tune. Huang, who occasionally bantered with the crowd in his native Taiwanese, briefly led the audience in singing the new anthem.

“We’re now at the tipping point of a new computing era with accelerated computing and AI that’s been embraced by almost every computing and cloud company in the world,” he said, noting that 40,000 large companies and 15,000 startups now use NVIDIA technologies, with 25 million downloads of CUDA software last year alone.

Top News Announcements From the Keynote

A New Engine for Enterprise AI

For enterprises that need the ultimate in AI performance, he unveiled DGX GH200, a large-memory AI supercomputer. It uses NVIDIA NVLink to combine up to 256 NVIDIA GH200 Grace Hopper Superchips into a single data-center-sized GPU.

The GH200 Superchip, which Huang said is now in full production, combines an energy-efficient NVIDIA Grace CPU with a high-performance NVIDIA H100 Tensor Core GPU in one superchip.

The DGX GH200 packs an exaflop of performance and 144 terabytes of shared memory, nearly 500x more than in a single NVIDIA DGX A100 320GB system. That lets developers build large language models for generative AI chatbots, complex algorithms for recommender systems, and graph neural networks used for fraud detection and data analytics.
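The “nearly 500x” figure can be checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not an NVIDIA specification: it assumes the “320GB” in the DGX A100 name is that system’s total GPU memory, and because the article does not say which unit convention it uses, it shows the result with both decimal (1 TB = 1,000 GB) and binary (1 TB = 1,024 GB) units. The helper names are hypothetical.

```python
# Back-of-the-envelope check of the DGX GH200 memory figures quoted above.
# Assumption: "320GB" in the DGX A100 name is the system's total GPU memory.

GH200_SYSTEM_MEMORY_TB = 144   # shared memory across one DGX GH200
MAX_SUPERCHIPS = 256           # GH200 Superchips linked by NVLink
DGX_A100_MEMORY_GB = 320       # memory in one DGX A100 320GB system


def tb_to_gb(tb: float, binary: bool = False) -> float:
    """Convert terabytes to gigabytes using decimal (1000) or binary (1024) units."""
    return tb * (1024 if binary else 1000)


def memory_ratio(binary: bool = False) -> float:
    """How many times more memory one DGX GH200 has than one DGX A100 320GB."""
    return tb_to_gb(GH200_SYSTEM_MEMORY_TB, binary) / DGX_A100_MEMORY_GB


def memory_per_superchip_gb(binary: bool = False) -> float:
    """Average share of the shared memory pool per superchip."""
    return tb_to_gb(GH200_SYSTEM_MEMORY_TB, binary) / MAX_SUPERCHIPS


if __name__ == "__main__":
    print(f"ratio (decimal units): {memory_ratio():.1f}x")                    # 450.0x
    print(f"ratio (binary units):  {memory_ratio(binary=True):.1f}x")         # 460.8x
    print(f"per superchip (binary): {memory_per_superchip_gb(True):.1f} GB")  # 576.0 GB
```

Under either convention the ratio lands between 450x and roughly 461x, which the article rounds to “nearly 500x.”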

Google Cloud, Meta and Microsoft are among the first expected to gain access to the DGX GH200, which can be used as a blueprint for future hyperscale generative AI infrastructure.

“DGX GH200 AI supercomputers integrate NVIDIA’s most advanced accelerated computing and networking technologies to expand the frontier of AI,” Huang told the audience in Taipei, many of whom had lined up outside the hall for hours before the doors opened.

NVIDIA is building its own massive AI supercomputer, NVIDIA Helios, coming online this year. It will use four DGX GH200 systems linked with NVIDIA Quantum-2 InfiniBand networking to supercharge data throughput for training large AI models.

The DGX GH200 forms the pinnacle of hundreds of systems announced at the event. Together, they’re bringing generative AI and accelerated computing to millions of users.

Zooming out to the big picture, Huang announced that more than 400 system configurations are coming to market powered by NVIDIA’s latest Hopper, Grace, Ada Lovelace and BlueField architectures. They aim to tackle the most complex challenges in AI, data science and high performance computing.

Acceleration in Every Size

To fit the needs of data centers of every size, Huang announced NVIDIA MGX, a modular reference architecture for creating accelerated servers. System makers will use it to quickly and cost-effectively build more than 100 different server configurations to suit a wide range of AI, HPC and NVIDIA Omniverse applications.

MGX lets manufacturers build CPU and accelerated servers using a common architecture and modular components. It supports NVIDIA’s full line of GPUs, CPUs, data processing units (DPUs) and network adapters, as well as x86 and Arm processors, across a variety of air- and liquid-cooled chassis.

QCT and Supermicro will be the first to market, with MGX designs appearing in August. Supermicro’s ARS-221GL-NR system, announced at COMPUTEX, will use the Grace CPU, while QCT’s S74G-2U system, also announced at the event, uses Grace Hopper.

ASRock Rack, ASUS, GIGABYTE and Pegatron will also use MGX to create next-generation accelerated computers.

5G/6G Calls for Grace Hopper

Separately, Huang said NVIDIA is helping shape future 5G and 6G wireless and video communications. A demo showed how AI running on Grace Hopper will transform today’s 2D video calls into more lifelike 3D experiences, providing an amazing sense of presence.

Laying the groundwork for new kinds of services, Huang announced NVIDIA is working with telecom giant SoftBank to build a distributed network of data centers in Japan. It will deliver 5G services and generative AI applications on a common cloud platform.

The data centers will use NVIDIA GH200 Superchips and NVIDIA BlueField-3 DPUs in modular MGX systems, as well as NVIDIA Spectrum Ethernet switches to deliver the highly precise timing the 5G protocol requires. The platform will reduce cost by increasing spectral efficiency while reducing energy consumption.

The systems will help SoftBank explore 5G applications in autonomous driving, AI factories, augmented and virtual reality, computer vision and digital twins. Future uses could even include 3D video conferencing and holographic communications.

Turbocharging Cloud Networks

Separately, Huang unveiled NVIDIA Spectrum-X, a networking platform purpose-built to improve the performance and efficiency of Ethernet-based AI clouds. It combines Spectrum-4 Ethernet switches with BlueField-3 DPUs and software to deliver 1.7x gains in AI performance and power efficiency over traditional Ethernet fabrics.

NVIDIA Spectrum-X, Spectrum-4 switches and BlueField-3 DPUs are available now from system makers including Dell Technologies, Lenovo and Supermicro.

Bringing Game Characters to Life

Generative AI is changing how people play, too.

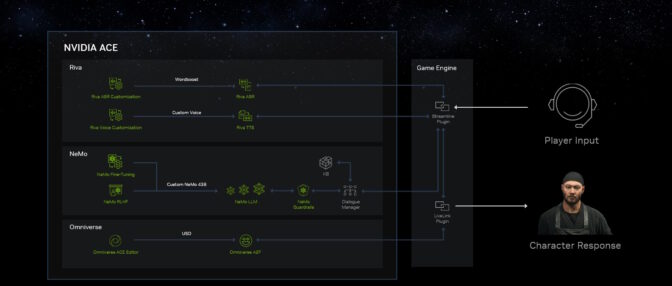

Huang announced NVIDIA Avatar Cloud Engine (ACE) for Games, a foundry service developers can use to build and deploy custom AI models for speech, conversation and animation. It will give non-playable characters conversational skills so they can respond to questions with lifelike personalities that evolve.

NVIDIA ACE for Games includes AI foundation models such as NVIDIA Riva to detect and transcribe the player’s speech. The text prompts NVIDIA NeMo to generate customized responses, which are animated with NVIDIA Omniverse Audio2Face.
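The three-stage flow described above (player speech to text, text to an in-character response, response to facial animation) can be sketched as a simple pipeline. This is a minimal illustration under stated assumptions: `transcribe_speech`, `generate_reply`, `animate_reply`, `npc_turn` and the `AnimatedLine` type are hypothetical stand-ins with stub bodies, not the actual Riva, NeMo or Audio2Face APIs, and the article does not say whether a text-to-speech step sits between the language model and the animation.

```python
from dataclasses import dataclass


# Hypothetical stand-ins for the ACE stages; these are NOT the real
# Riva, NeMo or Audio2Face APIs, just stubs that show the data flow.

def transcribe_speech(audio: bytes) -> str:
    """Speech-recognition stage (the role Riva plays). Stub: treats the bytes as UTF-8 text."""
    return audio.decode("utf-8").strip()


def generate_reply(player_text: str, persona: str) -> str:
    """Language-model stage (the role NeMo plays). Stub: echoes the question in character."""
    return f"[{persona}] You asked: {player_text}"


@dataclass
class AnimatedLine:
    text: str
    animation_frames: int  # hypothetical measure of facial-animation length


def animate_reply(reply: str, frames_per_char: int = 2) -> AnimatedLine:
    """Facial-animation stage (the role Audio2Face plays). Stub: length scales with the reply."""
    return AnimatedLine(text=reply, animation_frames=len(reply) * frames_per_char)


def npc_turn(player_audio: bytes, persona: str) -> AnimatedLine:
    """Run one conversational turn for a non-playable character."""
    player_text = transcribe_speech(player_audio)
    reply = generate_reply(player_text, persona)
    return animate_reply(reply)


if __name__ == "__main__":
    line = npc_turn(b"Where can I find the ramen shop?", persona="Shopkeeper")
    print(line.text)  # [Shopkeeper] You asked: Where can I find the ramen shop?
```

Keeping each stage behind its own function means a stub can be swapped for a real model without changing `npc_turn`.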

Accelerating Gen AI on Windows

Huang described how NVIDIA and Microsoft are collaborating to drive innovation for Windows PCs in the generative AI era.

New and enhanced tools, frameworks and drivers are making it easier for PC developers to develop and deploy AI. For example, the Microsoft Olive toolchain for optimizing and deploying GPU-accelerated AI models, along with new graphics drivers, will boost DirectML performance on Windows PCs with NVIDIA GPUs.

The collaboration will enhance and extend an installed base of 100 million PCs sporting RTX GPUs with Tensor Cores that boost the performance of more than 400 AI-accelerated Windows apps and games.

Digitizing the World’s Largest Industries

Generative AI is also spawning new opportunities in the $700 billion digital advertising industry.

For example, WPP, the world’s largest marketing services organization, is working with NVIDIA to build a first-of-its-kind generative AI-enabled content engine on Omniverse Cloud.

In a demo, Huang showed how creative teams will connect their 3D design tools, such as Adobe Substance 3D, to build digital twins of client products in NVIDIA Omniverse. Then, content from generative AI tools trained on responsibly sourced data and built with NVIDIA Picasso will let them quickly produce virtual sets. WPP clients can then use the complete scene to generate a host of ads, videos and 3D experiences for global markets and users to experience on any web device.

“Today ads are retrieved, but in the future when you engage information, much of it will be generated. The computing model has changed,” Huang said.

Factories Forge an AI Future

With an estimated 10 million factories, the $46 trillion manufacturing sector is a rich field for industrial digitalization.

“The world’s largest industries make physical things. Building them digitally first can save billions,” said Huang.

The keynote showed how electronics makers including Foxconn Industrial Internet, Innodisk, Pegatron, Quanta and Wistron are forging digital workflows with NVIDIA technologies to realize the vision of an entirely digital smart factory.

They’re using Omniverse and generative AI APIs to connect their design and manufacturing tools so they can build digital twins of factories. In addition, they use NVIDIA Isaac Sim for simulating and testing robots, and NVIDIA Metropolis, a vision AI framework, for automated optical inspection.

The latest component, NVIDIA Metropolis for Factories, can create custom quality-control systems, giving manufacturers a competitive advantage. It’s helping companies develop state-of-the-art AI applications.

AI Speeds Assembly Lines

For example, Pegatron, which makes 300 products worldwide, including laptops and smartphones, is creating virtual factories with Omniverse, Isaac Sim and Metropolis. That lets it try out processes in a simulated environment, saving time and cost.

Pegatron also used the NVIDIA DeepStream software development kit to develop intelligent video applications that led to a 10x improvement in throughput.

Foxconn Industrial Internet, a service arm of the world’s largest technology manufacturer, is working with NVIDIA Metropolis partners to automate significant portions of its circuit-board quality-assurance inspection points.

In a video, Huang showed how Techman Robot, a subsidiary of Quanta, tapped NVIDIA Isaac Sim to optimize inspection on the Taiwan-based giant’s manufacturing lines. It’s essentially using simulated robots to train robots how to make better robots.

In addition, Huang announced a new platform to enable the next generation of autonomous mobile robot (AMR) fleets. Isaac AMR helps simulate, deploy and manage fleets of autonomous mobile robots.

A large partner ecosystem, including ADLINK, Aetina, Deloitte, Quantiphi and Siemens, is helping bring all these manufacturing solutions to market, Huang said.

It’s one more example of how NVIDIA is helping companies realize the benefits of generative AI with accelerated computing.

“It’s been a long time since I’ve seen you, so I had a lot to tell you,” he said after the two-hour talk, to enthusiastic applause.

To learn more, watch the full keynote.