Generative AI is rapidly ushering in a new era of computing for productivity, content creation, gaming and more. Generative AI models and applications, such as NVIDIA NeMo and DLSS 3 Frame Generation, Meta LLaMA, ChatGPT, Adobe Firefly and Stable Diffusion, use neural networks to identify patterns and structures within existing data to generate new and original content.

When optimized for GeForce RTX and NVIDIA RTX GPUs, which offer up to 1,400 Tensor TFLOPS for AI inferencing, generative AI models can run up to 5x faster than on competing devices. This is thanks to Tensor Cores (dedicated hardware in RTX GPUs built to accelerate AI calculations) and regular software improvements. Enhancements introduced last week at the Microsoft Build conference doubled performance for generative AI models, such as Stable Diffusion, that take advantage of new DirectML optimizations.

As more AI inferencing happens on local devices, PCs will need powerful yet efficient hardware to support these complex tasks. To meet this need, RTX GPUs will add Max-Q low-power inferencing for AI workloads. The GPU will operate at a fraction of the power for lighter inferencing tasks, while scaling up to unmatched levels of performance for heavy generative AI workloads.

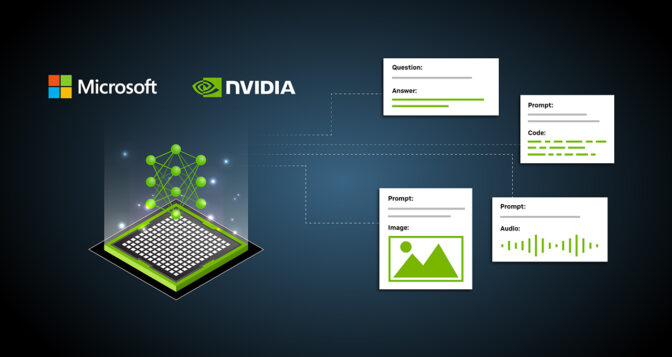

To create new AI applications, developers can now access a complete RTX-accelerated AI development stack running on Windows 11, making it easier to develop, train and deploy advanced AI models. This starts with developing and fine-tuning models with optimized deep learning frameworks available via Windows Subsystem for Linux.

Developers can then move seamlessly to the cloud to train on the same NVIDIA AI stack, which is available from every major cloud service provider. Next, developers can optimize the trained models for fast inferencing with tools like the new Microsoft Olive. Finally, they can deploy their AI-enabled applications and features to an install base of over 100 million RTX PCs and workstations that have been optimized for AI.

“AI will be the single largest driver of innovation for Windows customers in the coming years,” said Pavan Davuluri, corporate vice president of Windows silicon and system integration at Microsoft. “By working in concert with NVIDIA on hardware and software optimizations, we’re equipping developers with a transformative, high-performance, easy-to-deploy experience.”

To date, over 400 RTX AI-accelerated apps and games have been released, with more on the way.

During his keynote address kicking off COMPUTEX 2023, NVIDIA founder and CEO Jensen Huang introduced a new generative AI service to support game development: NVIDIA Avatar Cloud Engine (ACE) for Games.

This custom AI model foundry service transforms games by bringing intelligence to non-playable characters through AI-powered natural language interactions. Developers of middleware, tools and games can use ACE for Games to build and deploy customized speech, conversation and animation AI models in their software and games.

Generative AI on RTX, Anywhere

From servers to the cloud to devices, generative AI running on RTX GPUs is everywhere. NVIDIA’s accelerated AI computing is a low-latency, full-stack endeavor. We’ve spent many years optimizing every part of our hardware and software architecture for AI, including fourth-generation Tensor Cores, the dedicated AI hardware on RTX GPUs.

Regular driver optimizations ensure peak performance. The latest NVIDIA driver, combined with Olive-optimized models and updates to DirectML, delivers significant speedups for developers on Windows 11. For example, Stable Diffusion performance is improved by 2x compared with previous inference times for developers taking advantage of DirectML-optimized paths.

With the latest generation of RTX laptops and mobile workstations built on the NVIDIA Ada Lovelace architecture, users can take generative AI anywhere. Our next-gen mobile platform brings new levels of performance and portability, in form factors as small as 14 inches and as lightweight as about three pounds. Makers like Dell, HP, Lenovo and ASUS are pushing the generative AI era forward, backed by RTX GPUs and Tensor Cores.

“As AI continues to get deployed across industries at an expected annual growth rate of over 37% from now through 2030, businesses and consumers will increasingly need the right technology to develop and implement AI, including generative AI. Lenovo is uniquely positioned to empower generative AI spanning from devices to servers to the cloud, having developed products and solutions for AI workloads for years. Our NVIDIA RTX GPU-powered PCs, such as select Lenovo ThinkPad, ThinkStation, ThinkBook, Yoga, Legion and LOQ devices, are enabling the transformative wave of generative AI for better everyday user experiences in saving time, creating content, getting work done, gaming and more,” said Daryl Cromer, vice president and chief technology officer of PCs and Smart Devices at Lenovo.

“Generative AI is transformative and a catalyst for future innovation across industries. Together, HP and NVIDIA equip developers with incredible performance, mobility and the reliability needed to run accelerated AI models today, while powering a new era of generative AI,” said Jim Nottingham, senior vice president and general manager of Z by HP.

“Our recent work with NVIDIA on Project Helix centers on making it easier for enterprises to build and deploy trustworthy generative AI on premises. Another step in this historic moment is bringing generative AI to PCs. Think of app developers looking to perfect neural network algorithms while keeping training data and IP under local control. This is what our powerful and scalable Precision workstations with NVIDIA RTX GPUs are designed to do. And as the global leader in workstations, Dell is uniquely positioned to help users securely accelerate AI applications from the edge to the datacenter,” said Ed Ward, president of the client product group at Dell Technologies.

“The generative AI era is upon us, requiring immense processing and fully optimized hardware and software. With the NVIDIA AI platform, including NVIDIA Omniverse, which is now preinstalled on many of our products, we’re excited to see the AI revolution continue to take shape on ASUS and ROG laptops,” said Galip Fu, director of global consumer marketing at ASUS.

Soon, laptops and mobile workstations with RTX GPUs will get the best of both worlds. AI inference-only workloads will be optimized for Tensor Core performance while keeping GPU power consumption as low as possible, extending battery life and maintaining a cool, quiet system. The GPU can then dynamically scale up for maximum AI performance when the workload demands it.

Developers can also learn how to optimize their applications end to end to take full advantage of GPU acceleration via the NVIDIA AI for accelerating applications developer site.